Guidelines for the TREC-2002 Video Track

(last updated: )

1. Goal

Promote progress in content-based retrieval from digital video via open,

metrics-based evaluation.

2. Tasks

There are three main tasks with tests associated and participants in the Video Track must complete at least one of

these three:

- shot boundary determination

- feature extraction

- search

There is one voluntary auxilliary task with no direct test

involved:

- extraction of search test set features for donation

Many systems will accomplish the auxilliary task by running

part of their search system on the search test set early

enough to produce feature output to share. Or they may

run a stand-alone feature extraction system for the same

purpose.

The feature extraction, search, and auxilliary tasks come

in two flavors, depending on whether system development and

processing:

- A - use knowledge of the search test set

- B - do not use such knowledge

Each feature extraction task submission, search task submission,

or donation of extracted features must declare its type: A or B.

Search submissions of type B cannot make use of feature donations

of type A. Search submission of type A can make use of feature

donations of either type.

Available feature donations:

In both the feature extraction and search tasks, systems may be

compared within and/or across types.

Available keyframe donation:

The team at Dublin City

University has selected a keyframe for each shot from the common shot

reference in the search test collection and these are available for download.

2.1 Shot detection:

Identify the shot boundaries with their location and

type (cut or gradual) in the given video clip(s) -

2.2 Feature extraction:

Various semantic features concepts such as "Indoor/Outdoor", "People",

"Speech" etc. occur frequently in video databases. The proposed task

has the following objectives:

-

To begin work on a benchmark for evaluating the effectiveness of

detection methods for semantic concepts

-

To allow exchange of feature detection output based on the TREC Video

Track search test set prior to the search task results submission date so

that participants can explore innovative ways of leveraging

those detectors in answering the search task queries.

The task is as follows. Given a standard set of shot boundaries for

the feature extraction test collection and a list of feature

definitions, participants will return for each feature the list of at

most the top 1000 video shots from the standard set, ranked according

to the highest possibility of detecting the presence of the

feature. Each feature is assumed to be binary, i.e., it is either

present or absent in the given standard video shot.

Some participants may wish to make their feature detection output

available to participants in the search task. By August 1, these

participants should provide the detection over the search test

collection in the feature

exchange format.

Description of features to be detected:

These descriptions are

meant to be clear to humans, e.g., assessors/annotators creating truth

data and system developers attempting to automate feature detection.

They are not meant to indicate how automatic detection should be

achieved.

If the feature is true for some frame (sequence) within the shot, then

it is true for the shot; and vice versa. This is a simplifaction

adopted for the benefits it affords in pooling of results and

approximating the basis for calculating recall.

-

Outdoors: Segment contains a recognizably outdoor location,

i.e., one outside of buildings. Should exclude all scenes that are

indoors or are close-ups of objects (even if the objects are

outdoor).

-

Indoors: Segment contains a recognizably indoor location, i.e.,

inside a building. Should exclude all scenes that are outdoors or are

close-ups of objects (even if the objects are indoor).

-

Face: Segment contains at least one human face with the nose,

mouth, and both eyes visible. Pictures of a face meeting the above

conditions count.

-

People: Segment contains a group of two more humans, each of

which is at least partially visible and is recognizable as a

human.

-

Cityscape: Segment contains a recognizably city/urban/suburban

setting.

-

Landscape: Segment contains a predominantly natural inland

setting, i.e., one with little or no evidence of development by

humans. For example, scenes consisting mostly of plowed/planted

fields, pastures, orchards would be excluded. Some buildings, if small

features on the overall landscape, should be OK. Scenes with bodies of

water that are clearly inland may be included.

-

Text Overlay: Segment contains superimposed text large enough to be

read.

-

Speech: A human voice uttering words is recognizable as such in

this segment

-

Instrumental Sound: Sound produced by one or more musical

instruments is recognizable as such in this segment. Included are

percussion instruments.

-

Monologue: Segment contains an event in which a single person

is at least partially visible and speaks for a long time without

interruption by another speaker. Pauses are ok if short.

2.3 Search:

Given a multimedia statement of information need and the common shot

boundary reference for the search test collection, return a ranked list

of 100 shots from the standard set, which best satisfy the

need. Please note the following restrictions for this task:

-

The only manually created collection information search systems

are allowed to use will be the video titles, brief descriptions,

and descriptors accessible on the Internet Archive and Open Video

websites.

-

For TREC 2002 the track will set aside the challenging problem of

fully automatic topic analysis and query generation. Submissions will

be restricted to those with a human in the loop, i.e., manual or

interactive runs as defined below. Once we have a better idea what

features make sense for the TREC 2002 video topics and collection,

attempts to automate the extraction of such features can be more

efficiently investigated.

-

Because the choice of features and their combination for search is an

open research question, no attempt will be made in TREC 2002 to

restrict groups with respect to their use of features in

search. However, groups making manual runs must report their queries,

query features, and feature definitions. These might serve as a basis

for a common query language next year, if such a language is

justified.

-

Systems are allowed to use transcripts created by automatic speech

recognition (ASR). But it is recommended that if an X+ASR run is

submitted, then either an ASR-only or X-only run must also be

submitted so that the effect of ASR can be measured. All runs count in

determining how many runs a group submits.

-

Groups submitting interactive runs must include a measure of time

spent in browsing/searching for each search - minutes of elapsed time

from the time the searcher is given the topic to the time the search

effort ends.

Such groups are encouraged to measure user

characteristics and satisfaction as well and report this with their

results, but they need not submit this information to NIST. Here are

some examples of instruments for collection of user

characteristics/satisfaction data developed and used by the TREC

Interactive Track for several years.

3. Framework for the Video Track

The TREC 2002 Video Track is a laboratory-style evaluation that

attempts to model real world situations.

3.1 Search

In the case of the search task, the evaluation attempts to model the

operational situation in which a search system, tuned to a largely

static collection, is confronted with an as yet unseen query - much as

would happen when an end-user with an information need steps up to a

operational search system connected to a large, static video archive

and begins a search.

In this model the system is adapted before the test to the test

collection and to some basic assumptions about what kinds of

information needs it may encounter (e.g., requests for video material

on specific people, examples of classes of people, specific objects,

examples of classes of object and so analogously for events,

locations, etc. - as noted in the track guidelines). The system is at

no point adapted to particular test topics.

In the TREC Video Track the amount of time allowed to adapt the system

to the test collection is a covariant, not an independent

variable. Research groups interested in determining how well a system

adapted solely to data from outside the search test collection

performs, can use the feature extraction development/test collection

or data from the TREC-2001 Video Track rather than the TREC-2002

search test collection to develop their system.

For TREC 2002 the topics will be developed by NIST who will control

their distribution. Once the topics are received and before query

construction begins, the system must be frozen. It cannot be adapted

to the topics/queries. The time allowed for query construction will be

narrowly limited only if the participants want this. Even in this case

the topics will be available in time to give groups considerable

freedom in when they actually freeze their system, construct the

queries, and run the test.

3.2 Feature extraction and shot boundary determination

In the case of feature extraction and shot boundary determination, the

set of features or shot types is known during system development. The

test data themselves are unknown until test time, although high-level

information about it is assumed to be available. Other data, believed

similar enough to the test data to support system training/validation,

are available before the test. Such data could be provided by a

customer for whom the system is being developed, drawn from other

sources of judged similar on the basis of high-level comparison, etc.

-

Total identified collection: 68.45 hrs MPEG-1/VCD from:

- Partitioning of the videos in the identified collection

- Videos for the shot boundary detection task will taken from

outside the total identified collection as described above./li>

The files from the Open Video collection will be used as they exist

on the site in MPEG-1 format. The Internet Archive (IA) videos

available in VCD (MPEG-1) format as they exist on the site in VCD

format. NIST assumes participating groups will be able to download the

files from the IA and OV.

For video data, all experiments will use only the MPEG-1/VCD files

listed in the collection definition. Additional experiments using

other formats are not discouraged, but will be outside the evaluation

framework of the track for 2002.

4.1 Common shot boundary reference:

Here is the common shot boundary reference

for use in the search and feature extraction tasks. The emphasis here

is on the shots, not the transitions.

The shots are contiguous. There are no gaps between them. They do not

overlap. The media time format is based on the Gregorian day time (ISO

8601) norm. Fractions are defined by counting pre-specified fractions

of a second. In our case, the frame rate is 29.97. One fraction of a

second is thus specified as "PT1001N30000F".

TREC video id has the format of "XXX" and shot id "shotXXX_YYY".

The "XXX" is the sequence number of video onto which the video file

name is mapped, this is based on the "collection.xml" file. The "YYY"

is the sequence number of the shot.

The directory contains these file(type)s:

- xxx.mp7.xml - master shot list for video with id "xxx" in

collection.xml

- collection.xml - an xml version of the combined collection lists

- README - info on the files in the directory

- time.elements - info the meaning/format of the MPEG-7 MediaTimePoint and MediaDuration elements

- trecvid2002CommonShotBoundaryRef.tar.gz - gzipped tar file of the

directory contents

4.2 Data for the search task:

NIST randomly choose about 40 hours from the identified collection to

be used as the search test

collection. This is approximately four times the amount of data

used for the search test collection in TREC-2001.

With the exception of the feature extraction test, search task

participants are free to use any video material for developing their

search systems (including the search test collection and the feature

extraction development collection). The sole use of the feature

extraction test set in TREC-2002 is for testing in the feature

extraction task.

4.3 Data for the feature extraction task:

The approximately 30 hours of the identified collection left after the

search test collection has been removed has been designated the

feature extraction collections. It was partitioned randomly into a

feature development (training and validation) collection and a feature

test collection.

The feature development collection will be available for participants

to train their feature extractors for the feature extraction task as

well as feature-based search systems for the search task. With the

exception of the feature extraction test set, feature extraction task

participants are free to use any video (including the search test

collection) for developing their systems. None of the feature extraction test set should in any

way be used to create feature extractors. The sole use of the feature

extraction test set in TREC-2002 is for testing in the feature

extraction task.

4.4 Data for the shot boundary detection task:

The shot

boundary (SB) test collection will be about 5 hours of videos similar to

but from outside the those in the 68 hours of the identified

collection. It will be chosen by NIST and announced in time for

participants to download it but not before.

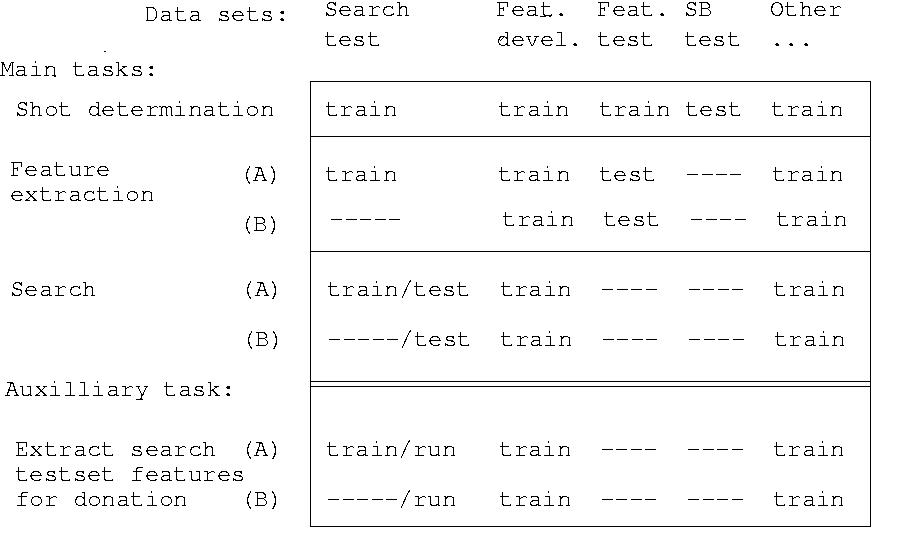

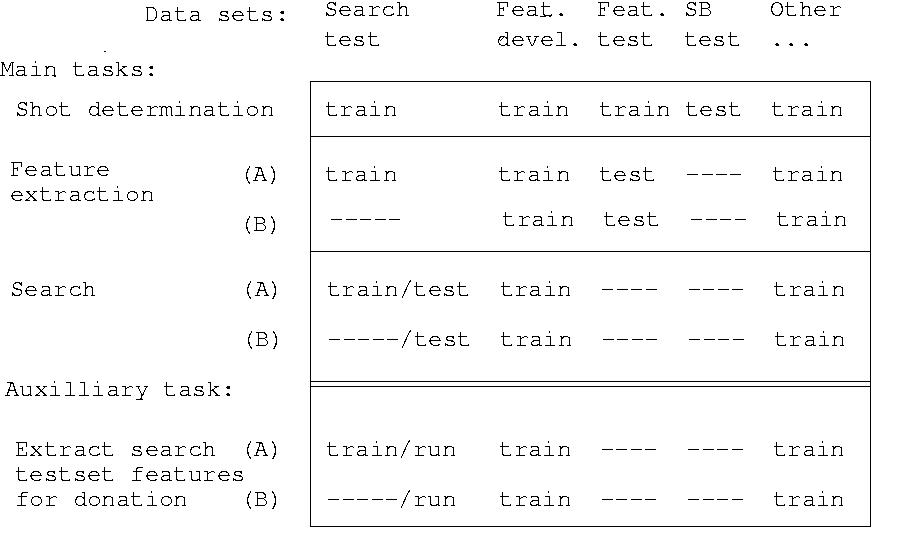

4.5 Summary of tasks and available data

5. Information needs and topics

5.1 Example types of informations needs

I'm interested in video material / information about:

-

a specific person

e.g., I want all the information you have on Ronald Reagan.

-

one or more instances of a category of people

e.g., Find me some footage of men wearing hardhats.

-

a specific thing

e.g., I'm interested in any material on Hoover Dam.

-

one or more instances of a category of things

e.g., I need footage of helicopters.

-

a specific event/activity

e.g., I'm looking for a clip of Ronald Reagan reading a speech about

the space shuttle

-

one or more instances of a category of events/activities

e.g., I want to include several different clips of rockets taking off.

I need to explain what cavitation is all about.

-

other?

5.2 Topics:

-

Describe the information need - input to systems and guide to humans assessing

relevance of system output

-

25 developed by NIST and available here for active participants

-

Multimedia - subject to the nature of the need and the questioner's choice

of expression

-

As realistic in intent and expression as possible - we can imagine a trained

searcher trying to find material for reuse in a large video archive, asking

for this information or video material in this way

-

Template for topic:

-

Title

-

Brief textual description of the information need (this text may contain

references to the examples)

-

Examples* of what is wanted:

-

reference to video clip

- Optional brief textual clarification of the example's relation to the need

-

reference to image

- Optional brief textual clarification of the example's relation to the need

-

reference to audio

- Optional brief textual clarification of the example's relation to the need

* If possible, the examples will come from outside the test

data. They could be taken from various stable public domain

sources. If the example comes from the test collection, the text

description will be such that using a quotation from the test

collection is plausible, e.g., "I want to find all the OTHER shots

dealing with X." A search for a single shot cannot be described with

an example from the target shot.

6. Submissions and Evaluation

6.1 Shot boundary detection

-

Participating groups may submit up to 10 runs. All runs will be

evaluated.

-

The format of submissions will be the same as in TREC 2001. Here is a

DTD for shot boundary

results on one video file, one for results on multiple files,

and a small example of

what a site would send to NIST for evaluation. Please check your

submission to see that it is well-formed

-

Please send your submissions (up to 10 runs) in an email to

[email protected]. Iindicate somewhere (e.g., in the subject

line) which group you are attached to so that we match you up with the

active participant's database.

-

Automatic comparison to human-annotated reference

- Measures:

- All transitions:

for each file, precision and recall for

detection; for each run, the mean precision and recall per reference transition

across all files

-

Gradual transitions only: "frame-recall" and "frame precision" will be

calculated for each detected gradual reference transition. Averages

per detected gradual reference transition will be calculated for each

file and for each submitted run. Details

are available.

6.2 Feature extraction

-

We believe we can judge up to 2 runs. Groups may submit one or two

important runs beyond the maximum of 2 and we will do our best to get

them judged but cannot promise. All runs must be prioritized.

- For each feature, participants will return the list of at most the

top 1000 standard video shots ranked according to the highest

possibility of detecting the feature.

-

Here is a DTD for

feature extraction results of one run, one for results from multiple

runs, and a

small example of what a site would send to NIST for

evaluation. Please check your submission to see that it is well-formed

-

Please send your submission in an email to [email protected]. Indicate

somewhere (e.g., in the subject line) which group you are attached to

so that we match you up with the active participant's database. Send

all of your runs as one file or send each run as a file but please

do not break up your submission any more than that. A run will contain

results for all features you worked on.

-

Performance evaluation of the feature detectors will be performed

using the feature detection test set only. The unit of testing and

performance assessment will be the video shot as defined by the

track's common shot boundary reference. The ranked lists for the

detection of each feature will be assessed manually as follows.

We will take the submitted ranked lists for a given feature and

merge them down to a fixed depth to get list of unique shots. These

will be assessed manually. We will then evaluate each submission

to its full depth based on the results of assessing the merged subsets.

-

If the feature is perceivable by the assessor for some frame

(sequence) however short or long then, then we'll assess it as true;

otherwise false. We'll rely on the complex thresholds built into the

human perceptual systems. Search and feature extraction applications

are likely - ultimately - to face the complex judgment of a human with

whatever variability is inherent in that.

-

If NIST is able to assess all the test set with the

participants' help, then the performance numbers will be computed on

the entire list of shots in the test set.

-

Runs will be compared using precision and recall. Precision-recall

curves will be used as well as a measure which combines precision and

recall (mean) average precision(see below under Search for

details).

6.3 Search

- We believe we can judge up to 4 runs for interactive and manual

searches combined. All runs must be prioritized. Groups may submit

one or two important runs beyond the maximum of 4 and we will do our

best to get them judged but cannot promise.

- Here is a DTD for

search results of one run, one for results from multiple

runs, and a

small example of what a site would send to NIST for

evaluation. Please check your submission to see that it is well-formed

-

Please send your submission in an email to [email protected]. Indicate

somewhere (e.g., in the subject line) which group you are attached to

so that we match you up with the active participant's database. Send

all of your runs as one file or send each run as a file but please

do not break up your submission any more than that. A run will contain

results for all of the topics.

-

Human assessment of each shot as to whether the shot meets the need or

not. The ranked lists for the

detection of each topic will be assessed manually as follows.

We will take the submitted ranked lists for a given topic and

merge them down to a fixed depth to get list of unique shots. These

will be assessed manually. We will then evaluate each submission

to its full depth based on the results of assessing the merged subsets.

The likelihood of finding more of the possible relevant shots will be

increased by the inclusion of interactive runs. A special NIST run,

created by a manual search during topic preparation, will be included

in its entirety among the merged subsets.

- Result set size: 100 shots maximum

- Per-search measures:

- average precision (definition below)

- elapsed time (for interactive runs only)

- Per-run measure:

- mean average precision (MAP):

Non-interpolated average precision, corresponds to the area

under an ideal (non-interpolated) recall/precision curve. To compute

this average, precision average for each topic is first calculated.

This is done by computing the precision after every retrieved relevant

shot and then averaging these precisions over the total number of

retrieved relevant shots in the collection for that topic. Average

precision favors

highly ranked relevant documents. It allows comparison of different

size result sets. Submitting the maximum number of items per result

set can never lower the average precision for that submission.

These topic averages are

then combined (averaged) across all topics in the appropriate set to

create the non-interpolated mean average precision (MAP) for that

set. (See the TREC-10

Proceedings appendix on common evaluation measures for more information.)

7. Milestones for TREC-2002 Video Track

- 14. Feb

- Short application due at NIST. See bottom of Call for Participation

- 1. May

- Guidelines complete (includes definition of search, feature extraction development, and feature extraction test collections)

- 20. May

- Common shot boundary reference for the search test collection available

- 1. Jul

-

ASR transcripts

available from groups willing to share in this MPEG-7 format.

- 22. Jul

- Shot boundary test collection defined

- 1. Aug

- Any donated

MPEG-7 output of feature extraction on the search test collection

due/available in this format.

- 8. Aug

- Search topics available for active participants

- 11. Aug

- Shot boundary detection submissions due at NIST for evaluation.

- 2. Sep

- Feature extraction task submission due at NIST for evaluation. See section 6.2 above for details

- 8. Sep

- Search task submission due at NIST for evaluation. See section 6.3 above for details

- 11. Oct

- Results of evaluations returned to participants

- 3. Nov

- Conference notebook papers due at NIST

- 19.-22. Nov

- TREC 2002 Conference at NIST in Gaithersburg, Md.

8. Results (evaluated submissions and notebook papers for submitters)

The following links lead to information on the results of

the three evaluations. Use the password provided to those who

submitted runs for evaluation.

9. Guideline issues to be resolved

- Can groups planning interactive runs agree on some minimal user satisfaction questions/measures? Here are some examples developed and used by the Interactive Track for several years.

- Others?

10. Contacts:

-

Coordinator:

-

NIST contact:

-

Email discussion list:

-

[email protected]

-

open

archive

-

If you would like to subscribe, logon using the logon you will use to

send mail to the discussion list and to which you would like trecvid

email to be reflected. Send email to [email protected] and include

in the body the following:

subscribe trecvid YOUR FULL NAME

You'll receive instructions on using the list. Basically, you send

your thoughts via email to [email protected] and they are automatically

sent out to everyone who is subscribed to the list.

Last

updated:

Last

updated:

Date created:

Monday, 19-Nov-01

For further information contact Paul Over ([email protected])

Last

updated:

Last

updated: