in the US. If we are unable to read your jpg/pdf files we

will request a faxed copy of your forms.

in the US. If we are unable to read your jpg/pdf files we

will request a faxed copy of your forms.

The main goal of the TREC Video Retrieval Evaluation (TRECVID) is to promote progress in content-based analysis of and retrieval from digital video via open, metrics-based evaluation. TRECVID is a laboratory-style evaluation that attempts to model real world situations or significant component tasks involved in such situations.

In 2006 TRECVID completed the second two-year cycle devoted to automatic segmentation, indexing, and content-based retrieval of digital video - broadcast news in English, Arabic, and Chinese. It also completed two years of pilot studies on exploitation of unedited video (rushes). Some 70 research groups have been provided with the TRECVID 2005-2006 broadcast news video and many resources created by NIST and the TRECVID community are available for continued research on this data independent of TRECVID. See the "Past data" section of the TRECVID website for pointers.

In 2007 TRECVID began exploring new data (cultural, news magazine, documentary, and education programming) and an additional, new task - video rushes summarization. In 2008 that work continued with the exception that the shot boundary detection task was retired and copy detection and surveillance event detection were added.

TRECVID 2009 will test systems on the following tasks:

Changes to be noted by 2008 participants:

A number of datasets are available for use in TRECVID 2009. We describe them here and then indicate below which data will be used for development versus test for each task.

For the search and feature tasks:

The 100 hours used as test data for 2008 (tv8.sv.test) will be combined with 180 hours of new test data (tv9.sv.test) to create the 2009 test set for the search and feature tasks. This will allow us to retest for progress in detection of some of the 2008 features against some of the 2008 test data.

For the copy detection task:

C. Petersohn. "Fraunhofer HHI at TRECVID 2004: Shot Boundary Detection System", TREC Video Retrieval Evaluation Online Proceedings, TRECVID, 2004 URL: www-nlpir.nist.gov/projects/tvpubs/tvpapers04/fraunhofer.pdfCode developed by Peter Wilkins and Kirk Zhang at Dublin City University will be used to format the reference. The method used in 2005/6/8 and to be repeated with the data for 2009 is described here.

Marijn Huijbregts, Roeland Ordelman and Franciska de Jong, Annotation of Heterogeneous Multimedia Content Using Automatic Speech Recognition. in Proceedings of SAMT, December 5-7 2007, Genova, Italy

In order to be eligible to receive the data, you must have have applied for participation in TRECVID. Your application will be acknowledged by NIST with a team ID, and active participant's password, and information about how to obtain the data.

Then you will need to complete the relevant permission forms (from the active participant's area) and email a scanned image (jpg) of each page or a pdf file of the document to In your email include the following:

As Subject: "TRECVID data request"

In the body: your name

your team ID (given to you when you apply to participate)

the kinds of data you are requesting (BBC, S&V, and/or Gatwick)

If you cannot provide a jpg/pdf of the forms, please create a cover

sheet (Attention: Lori Buckland) that identifies you, your team ID,

your email address, and each kind of data (BBC, S&V, and/or Gatwick)

you are requesting. Then fax all pages to  in the US. If we are unable to read your jpg/pdf files we

will request a faxed copy of your forms.

in the US. If we are unable to read your jpg/pdf files we

will request a faxed copy of your forms.

Please ask only for the test data (and optional development data) required for the task(s) you apply to participate in and intend to complete. One permission form will cover 2007, 2008, and 2009 BBC data. One permission form will cover 2007, 2008, and 2009 Sound and Vision data. One permission form will cover the 2008 London Gatwick data to be used for development in 2009. The 2009 London Gatwick test data will be handled separately.

Within a few days after the permission forms have been received, you will be emailed the access codes you need to download the data using the information about data servers in the the active participant's area.

The following tasks are proposed for 2009.

Further information about the tasks is available at the following web sites:

Various high-level semantic features, concepts such as "Indoor/Outdoor", "People", "Speech" etc., occur frequently in video databases. The proposed task will contribute to work on a benchmark for evaluating the effectiveness of detection methods for semantic concepts

The task is as follows: given the feature test collection, the common shot boundary reference for the feature extraction test collection, and the list of feature definitions, participants will return for each feature the list of at most 2000 shot IDs from the test collection, ranked according to the highest possibility of detecting the presence of the feature. Each feature is assumed to be binary, i.e., it is either present or absent in the given reference shot.

All feature detection submissions will be made available to all participants for use in the search task - unless the submitter explicitly asks NIST before submission not to do this.

The descriptions are those used in the common annotation effort. They are meant for humans, e.g., assessors/annotators creating truth data and system developers attempting to automate feature detection. They are not meant to indicate how automatic detection should be achieved.

If the feature is true for some frame (sequence) within the shot, then it is true for the shot; and vice versa. This is a simplification adopted for the benefits it affords in pooling of results and approximating the basis for calculating recall.

NOTE: In the following, "contains x" is short for "contains x to a degree sufficient for x to be recognizable as x to a human" . This means among other things that unless explicitly stated, partial visibility or audibility may suffice.

NOTE: NIST will instruct the assessors during the manual evaluation of the feature task submissions as follows. The fact that a segment contains video of physical objects representing the topic target, such as photos, paintings, models, or toy versions of the topic target, will NOT be grounds for judging the feature to be true for the segment. Containing video of the target within video may be grounds for doing so.

In 2009, participants in the high-level feature task must submit results for 20 features.

For 2009, 10 features from those tested in 2008 will be added to 10 new features selected based on suggestions from the participants in the 2009 high-level feature task during the month of March. A feature must be moderately frequent (not too rare (>100) and not too frequent (<500) in the development collection of ~200 hours), have a clear definition to support annotation and results assessment, and conceivably be of use in searching. Also, we want to avoid overlap with previously used topics/features.

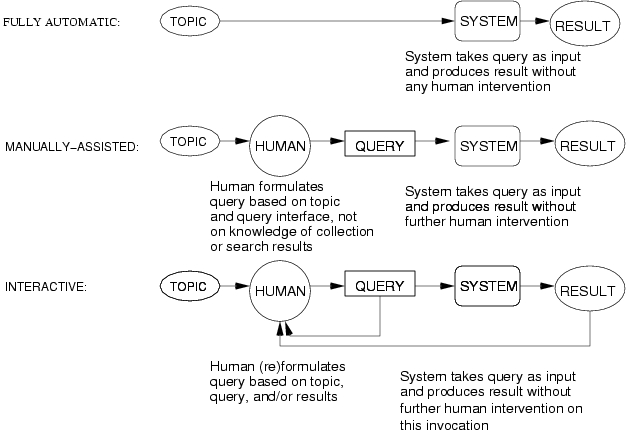

Search is high-level task which includes at least query-based retrieval and browsing. The search task models that of an intelligence analyst or analogous worker, who is looking for segments of video containing persons, objects, events, locations, etc. of interest. These persons, objects, etc. may be peripheral or accidental to the original subject of the video.

The task is as follows: given the search test collection, a multimedia statement of information need (topic), and the common shot boundary reference for the search test collection, return a ranked list of at most N common reference shots from the test collection, which best satisfy the need. For the standard search task, N = 1000. For the high-precision search task N = 10.

NOTE: In the topics, "contains x" is short for "contains x to a degree sufficient for x to be recognizable as x to a human" . This means among other things that unless explicitly stated, partial visibility or audibility may suffice.

NOTE: The fact that a segment contains video of physical objects representing the topic target, such as photos, paintings, models, or toy versions of the topic target, will NOT be grounds for judging the feature to be true for the segment. Containing video of the target within video may be grounds for doing so.

NOTE: When a topic expresses the need for x and y and ..., all of these (x and y and ...) must be perceivable simultaneously in one or more frames of a shot in order for the shot to be considered as meeting the need.

Please note the following restrictions for both forms for the search task:

As used here, a copy is a segment of video derived from another video, usually by means of various transformations such as addition, deletion, modification (of aspect, color, contrast, encoding, ...), camcording, etc. Detecting copies is important for copyright control, business intelligence and advertisement tracking, law enforcement investigations, etc. Content-based copy detection offers an alternative to watermarking. The TRECVID copy detection task will be will be based on the framework tested in TRECVID 2008, which used the CIVR 2007 Muscle benchmark.

Videos often contain audio. Sometimes the original audio is retained in the copied material, sometimes it is replaced by a new soundtrack. Nevertheless, audio is an important and strong feature for some application scenarios of video copy detection. Since detection of untransformed audio copies is relatively easy and the primary interest of the TV community is in video analysis, it was decided to model the required CD tasks with video-only and video+audio queries. However, since audio is of importance for practical applications, there will be an additional optional task using transformed audio-only queries.

For 2009, we plan to require each group to submit at least two runs using video-only queries and two using audio+video queries. Submissions using audio-only queries will be optional. For each kind of run, there will be two application profiles to consider. One will aim to reduce the false alarm rate to 0 and then optimize the probability of miss and the speed. The second will set an equal cost for false alarms and misses. Thus a minimum of 4 runs will be required. Runs on audio-only queries will be optional.

The required system tasks will be as follows: given a test collection of videos and a set of queries, determine for each query the place, if any, that some part of the query occurs, with possible transformations, in the test collection. The set of possible transformations will be based to the extent possible on actually occurring transformations.

Each query will be constructed using tools developed by IMEDIA to include some randomization at various decision points in the construction of the query set. Some manual procedures (e.g. for the camcording transformation) were used in 2008. The automatic tools developed by IMEDIA for TRECVID 2008 are available for download. For each video-only query, the tools will take a segment from the test collection, optionally transform it, embed it in some video segment which does not occur in the test collection, and then finally apply a video transformation the entire query segment. Some queries may contain no test segment; others may be composed entirely of the test segment. Video transformations used in 2008 are documented in the general plan for query creation. and in the final video transformations document with examples.. For 2009 we will use a subset of the 2008 video transformation listed here:

Also we noticed that in some 2008 queries where both "insertion of pattern" and "text insertion" were selected, it made the video extremely crowded, so in 2009 we will try to either decrease the font of the text or choose relatively small patterns.

The audio-only queries will be generated along the same lines as the video-only queries: a set of base audio-only queries is transformed by several techniques that are intended to be typical of those that would occur in real reuse scenarios: (1) bandwidth limitation (2) other coding-related distortion (e.g. subband quantization noise) (3) variable mixing with unrelated audio content. The audio transformations used in 2009 are documented here. The transformed queries will be downloadable from NIST.

The audio+video queries will consist of the aligned versions of transformed audio and video queries, i.e, they will be various combinations of transformed audio and transformed video from a given base audio+video query. In this way sites can study the effectiveness of their systems for individual audio and video transformations and their combinations. These queries will not be downloadable. Rather, NIST will provide a list of how to construct each audio+video test query so that given the audio-only queries and the video-only queries, sites can use a tool such as ffmpeg to construct the audio+video queries.

Please note: Only submissions which are valid when checked against the supplied DTDs will be accepted. You must check your submission before submitting it. NIST reserves the right to reject any submission which does not parse correctly against the provided DTD(s). Various checkers exist, e.g., Xerces-J: java sax.SAXCount -v YourSubmision.xml.

The results of the evaluation will be made available to attendees at the TRECVID workshop and will be published in the final proceedings and/or on the TRECVID website within six months after the workshop. All submissions will likewise be available to interested researchers via the TRECVID website within six months of the workshop.

Information on submissions may be found here.

Information about the evaluation and the measures is available.

We will also evaluate the 10 features from 2008 against the 100 hours of 2008 test data. Those results can be compared to the results for those features in 2008.

Each interactive run will contain one result for each and every topic using the system variant for that run. Each result for a topic can come from only one searcher, but the same searcher does not need to be used for all topics in a run. If a site has more than one searcher's result for a given topic and system variant, it will be up to the site to determine which searcher's result is included in the submitted result. NIST will try to make provision for the evaluation of supplemental results, i.e., ones NOT chosen for the submission described above. Details on this will be available by the time the topics are released.

The measures will be the same for both forms of the search task except that the average precision will be calculated using the lesser of the number of known relevant or the size of the result set (10), which in the high-precision task for almost all topics is expected to be the size of the result set. See Webber et al for a discussion of using average precision versus precision at 10 (P@10).

The following are the target dates for 2009:

Here is a list of work items that must be completed before the guidelines are considered to be final..

Once subscribed, you can post to this list by sending you thoughts as email to [email protected], where they will be sent out to EVERYONE subscribed to the list, i.e., all the other active participants.

Last

updated:

Last

updated: